PoseNormNet: Identity-preserved Posture Normalization of 3D Body Scans in Arbitrary Postures

Abstract

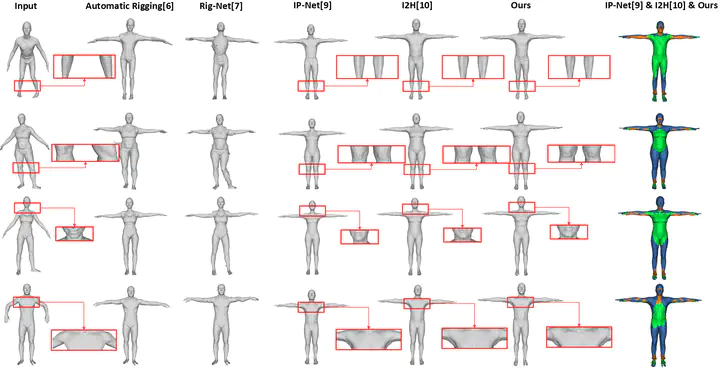

Three-dimensional (3-D) human models accurately represent the body shape of their subjects, a property that is key to many human-centric industrial applications, including fashion design, body biometric extraction, and computer animation. These tasks usually require a high-fidelity human body mesh in a canonical posture (e.g., an "A" pose or a "T" pose). Although 3-D scanning has become a fast and popular way to acquire a subject's body shape, automatically normalizing the posture of scanned bodies remains under-researched. Existing methods rely heavily on skeleton-driven animation techniques. However, these methods require a carefully designed skeleton and skin weights, which are time-consuming to prepare, and they tend to fail when the initial posture is complicated. In this article, a novel deep learning-based approach, dubbed PoseNormNet, is proposed to automatically normalize the postures of scanned bodies. The proposed algorithm is easy to apply because it requires no rigging priors, and it works well for subjects in arbitrary postures. Extensive experimental results on both synthetic and real-world datasets demonstrate that the proposed method achieves state-of-the-art performance in terms of both objective metrics and subjective evaluation.