Abstract

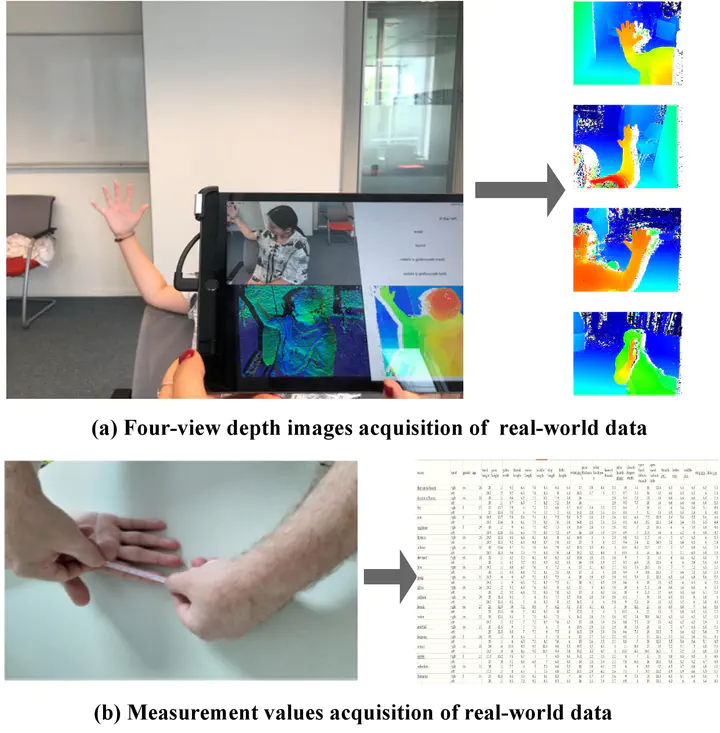

Accurately reconstructing 3-D hand shapes of patients is important for immobilization device customization, artificial limb generation, and hand disease diagnosis. Traditional 3-D hand scanning requires multiple scans taken around the hand with a 3-D scanning device. Such methods require patients to hold an open-palm posture during scanning, which is painful or even impossible for patients with impaired hand function. Moreover, once the multi-view partial point clouds are collected, expensive post-processing is necessary to generate a high-fidelity hand shape. To address these limitations, we propose a novel deep-learning method, dubbed PatientHandNet, that reconstructs high-fidelity hand shapes in a canonical open-palm pose from multiple depth images acquired with a single depth camera.

Publication

IEEE Transactions on Instrumentation and Measurement

Senior Lecturer (Associate Professor)

Pengpeng Hu is currently a Senior Lecturer (Associate Professor) with The University of Manchester. His research interests include biometrics, geometric deep learning, 3D human body reconstruction, point cloud processing, and vision-based measurement. He serves as an Associate Editor for IEEE Transactions on Neural Networks and Learning Systems, IEEE Transactions on Automation Science and Engineering, and Engineering and Mathematics in Medical and Life Sciences, as well as an Academic Editor for PLOS ONE and a member of the editorial board for Scientific Reports. He is also the Programme Chair for the 25th UK Workshop on Computational Intelligence (UKCI 2026) and an Area Chair for the 35th British Machine Vision Conference (BMVC 2024). He is the recipient of the Emerald Literati Award for an outstanding paper in 2019.