Abstract

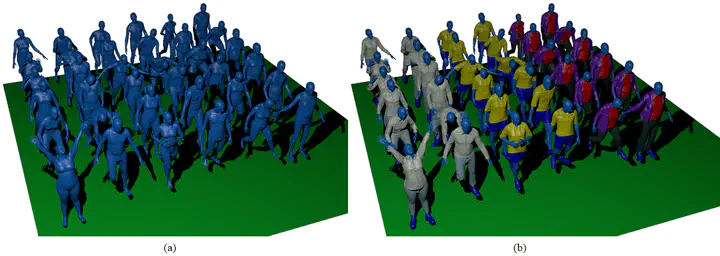

Estimating 3-D human body shape and pose under clothing is important for many applications, including virtual try-on, noncontact body measurement, and avatar creation for virtual reality. Existing body shape estimation methods formulate this task as an optimization problem, fitting a parametric body model to a single dressed-human scan or, for better accuracy, to a sequence of dressed-human meshes. Because this optimization is computationally expensive, it is impractical for applications that require fast acquisition, such as gaming and virtual try-on. In this article, we propose Body PointNet, the first learning-based approach to estimate the human body shape under clothing from a single dressed-human scan. Body PointNet operates directly on raw point clouds and predicts the undressed body in a coarse-to-fine manner. Because training requires aligned pairs of dressed scans and undressed bodies, as well as genus-0 manifold meshes (i.e., single-layer surfaces), we face a major challenge: a lack of training data. To address this challenge, we propose a novel method to synthesize dressed-human pseudoscans and the corresponding ground-truth bodies. We present a new large-scale dataset, dubbed body under virtual garments, for learning body shape estimation from 3-D dressed-human scans. Comprehensive evaluations show that the proposed Body PointNet outperforms state-of-the-art methods in both accuracy and running time.