Abstract

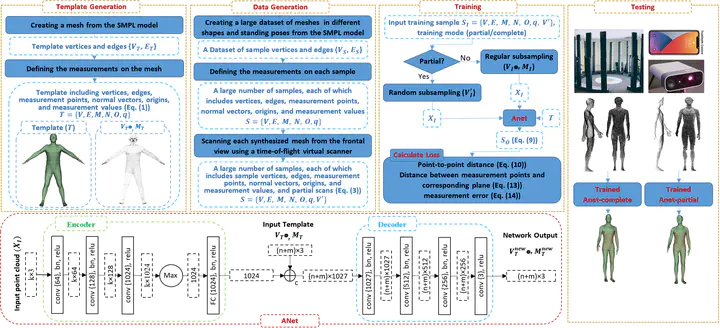

3D anthropometric measurement extraction is important for applications such as clothing design, online garment shopping, and medical diagnosis. State-of-the-art 3D anthropometric measurement extraction methods estimate the measurements either from landmarks located on the input scan or by fitting a template to the input scan using optimization-based techniques. Landmark detection is very sensitive to noise and missing data. Template-based methods address this problem, but the optimization-based template fitting algorithms they employ are computationally complex and time-consuming. To address these limitations, we propose a deep neural network architecture that fits a template to the input scan and outputs the reconstructed body together with the corresponding measurements. Unlike existing template-based anthropometric measurement extraction methods, the proposed approach does not need to transfer and refine the measurements from the template to the deformed template, which makes it faster and more accurate. A novel loss function, developed specifically for 3D anthropometric measurement extraction, is introduced. Additionally, two large datasets of complete and partial front-facing scans are proposed and used in training. This results in two models, dubbed Anet-complete and Anet-partial, which extract the body measurements from complete and partial front-facing scans, respectively. Experimental results on synthesized data as well as on real 3D scans captured by a photogrammetry-based scanner, an Azure Kinect sensor, and the very recent TrueDepth camera system demonstrate that the proposed approach systematically outperforms the state-of-the-art methods in terms of accuracy and robustness.