Abstract

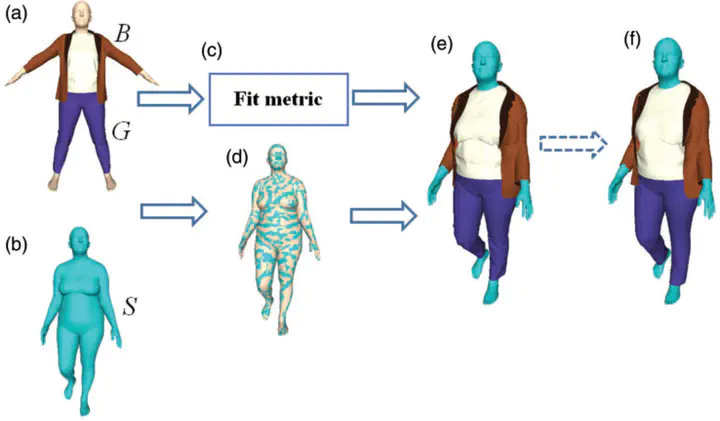

Virtual try-on synthesizes garments on target bodies in the 2D or 3D domain. Although existing virtual try-on methods focus on redressing garments, virtual try-on of hair, shoes, and wearable accessories remains under-explored. In this paper, we present the first general method for virtual try-on that is fully automatic and suitable for many items, including garments, hair, shoes, watches, necklaces, and hats. Starting from wearable items pre-defined on a reference human body model, we propose an automatic method that deforms the reference body mesh to fit a target body, yielding dense triangle correspondences. An improved fit metric is then used to represent the interaction between the wearable items and the body. Finally, using the triangle correspondences and the fit metric, the wearable items are inferred quickly and efficiently from the shape and posture of the target body. Extensive experimental results show that, besides being automatic and efficient, the proposed method can be easily extended to dynamic try-on by applying rigging and importing motion capture data, and that it handles both tight and loose garments, and even multi-layer clothing.