W2H-Net: Fast Prediction of Waist-to-Hip Ratio from Single Partial Dressed Body Scans in Arbitrary Postures via Deep Learning

Abstract

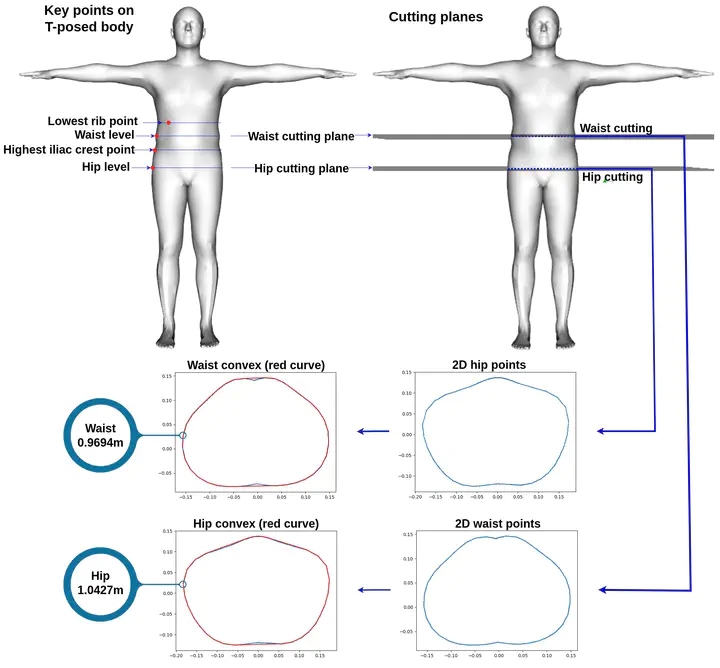

The Waist-to-Hip Ratio (WHR) is an important indicator for health risk prediction, body fat distribution, body shape analysis, and physical fitness analysis. The conventional approach to obtaining the WHR is manual measurement: an experienced anthropometrist measures the waist and hip circumferences of a subject who wears tight clothing and holds a predetermined posture, and then computes the ratio of the two measurements. Errors in the WHR can accumulate from the anthropometrist's subjectivity as well as from the subject's pose and attire during measurement. Non-contact anthropometric measurement using 3D scanning technology has shown promise in providing higher accuracy and faster measurement than traditional methods. However, such methods require complete, undressed body scans as input, which are not always available. In this paper, we propose W2H-Net, to the best of our knowledge the first deep learning-based algorithm that predicts the WHR directly from a single partial scan of a dressed body in an arbitrary posture. W2H-Net introduces a novel framework called Focus-Net, which improves learning accuracy by selectively focusing on the parts of the input that require attention. W2H-Net provides a flexible, cost-effective, and privacy-preserving way to obtain accurate WHR measurements, which are crucial for predicting the health risks associated with central obesity. Extensive experimental results demonstrate the superiority of the proposed method.
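For reference, the quantity being predicted is simple arithmetic: WHR = waist circumference / hip circumference. The minimal sketch below shows that ratio together with one common way of taking a tape-like girth from a horizontal slice of a 3D scan, namely the perimeter of the slice's convex hull (a taut tape bridges concave regions). This is an illustrative assumption only, not the W2H-Net method, which predicts the WHR directly with a neural network; the function names `convex_hull`, `tape_circumference`, and `waist_to_hip_ratio` are hypothetical.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float]  # (x, y) coordinates of a point in a horizontal scan slice


def _cross(o: Point, a: Point, b: Point) -> float:
    # z-component of (a - o) x (b - o); > 0 means a counter-clockwise turn
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: Iterable[Point]) -> List[Point]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other chain.
    return lower[:-1] + upper[:-1]


def tape_circumference(slice_points: Iterable[Point]) -> float:
    """Hypothetical helper: approximate a tape-measure girth as the hull perimeter."""
    hull = convex_hull(slice_points)
    if len(hull) < 2:
        return 0.0
    return sum(math.dist(hull[i], hull[(i + 1) % len(hull)]) for i in range(len(hull)))


def waist_to_hip_ratio(waist: float, hip: float) -> float:
    """WHR = waist circumference / hip circumference (both in the same unit)."""
    if waist <= 0 or hip <= 0:
        raise ValueError("circumferences must be positive")
    return waist / hip
```

For example, `waist_to_hip_ratio(80.0, 100.0)` returns `0.8`. The convex-hull perimeter is only a stand-in for a tape measurement on an undressed, complete slice; loose clothing and missing regions in a partial scan are exactly what break this geometric approach, which is the gap the abstract says W2H-Net targets.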