Abstract

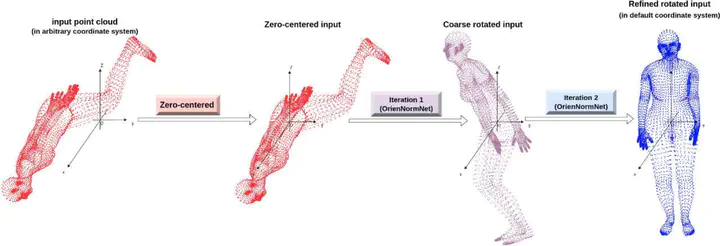

3D human body models are widely used in human-centric industrial applications, including healthcare, fashion design, body biometrics extraction, and computer animation. Before body models can be processed and analyzed, they must be rotated to a common orientation. For instance, body measurement systems and virtual try-on systems usually assume that the orientation of the body is known; without correct orientation information, these systems output incorrect results or report errors. Unfortunately, in practice the orientations of scanned bodies differ, because variations in scanner setup place them in different coordinate systems. Automatically normalizing body orientation is challenging due to pose variations, noise, and holes introduced during 3D body scanning. In this study, we propose a novel deep learning-based method, dubbed OrienNormNet, for normalizing the orientation of 3D body models. As shown in Figure 1 and Figure 2, OrienNormNet directly consumes raw point clouds or mesh vertices and is applied iteratively. First, the centroid of each point cloud is translated to the origin to obtain a zero-centered point cloud. Next, OrienNormNet consumes the zero-centered raw point cloud and outputs coarse axis rotation angles. Finally, OrienNormNet takes the coarsely rotated point cloud from the previous step as updated input and outputs refined axis rotation angles. By applying the obtained coarse and fine axis rotation angles, thousands of bodies can be adjusted to the same orientation in a few seconds. Experimental results on synthetic as well as real-world datasets validate the effectiveness of the proposed method.
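The centering and coarse-to-fine steps described above can be sketched as follows. This is a minimal, hedged illustration, not the authors' implementation. `predict_angles` is a hypothetical stand-in for OrienNormNet, assumed to map a batch of point clouds of shape `(B, N, 3)` to per-axis correction angles of shape `(B, 3)` in radians. The rotation convention (rotate about x, then y, then z, i.e. `R = Rz @ Ry @ Rx`) and the interpretation of the predicted angles as the correction to apply directly are also assumptions, since the abstract does not specify them. Batching all bodies into one array is one plausible way to process thousands of bodies at once.

```python
import numpy as np
from typing import Callable, Tuple


def rotation_matrix(angles: np.ndarray) -> np.ndarray:
    """Per-axis angles (B, 3) in radians -> rotation matrices (B, 3, 3).

    ASSUMED convention: rotate about x, then y, then z, so R = Rz @ Ry @ Rx.
    """
    ax, ay, az = angles[:, 0], angles[:, 1], angles[:, 2]
    cx, sx = np.cos(ax), np.sin(ax)
    cy, sy = np.cos(ay), np.sin(ay)
    cz, sz = np.cos(az), np.sin(az)
    one, zero = np.ones_like(ax), np.zeros_like(ax)
    rx = np.stack([one, zero, zero, zero, cx, -sx, zero, sx, cx], axis=-1).reshape(-1, 3, 3)
    ry = np.stack([cy, zero, sy, zero, one, zero, -sy, zero, cy], axis=-1).reshape(-1, 3, 3)
    rz = np.stack([cz, -sz, zero, sz, cz, zero, zero, zero, one], axis=-1).reshape(-1, 3, 3)
    return rz @ ry @ rx


def normalize_orientation(
    points: np.ndarray,
    predict_angles: Callable[[np.ndarray], np.ndarray],  # hypothetical OrienNormNet stand-in
    num_passes: int = 2,  # pass 1 = coarse, pass 2 = fine
) -> Tuple[np.ndarray, np.ndarray]:
    """Zero-center a batch of point clouds (B, N, 3) and rotate them iteratively.

    Returns the rotated clouds and the accumulated rotation per body (B, 3, 3).
    """
    # Step 1: translate each cloud's centroid to the origin.
    current = points - points.mean(axis=1, keepdims=True)
    total = np.tile(np.eye(3), (points.shape[0], 1, 1))
    for _ in range(num_passes):
        # Step 2/3: predict angles from the current (coarsely rotated) clouds.
        r = rotation_matrix(predict_angles(current))
        # Points are row vectors, so p' = p @ R^T.
        current = current @ np.transpose(r, (0, 2, 1))
        # Compose with the rotations applied in earlier passes.
        total = r @ total
    return current, total
```

In use, a trained network would replace `predict_angles`; the accumulated matrix `total` can then be applied to the full-resolution mesh vertices of each body after zero-centering them.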