Abstract

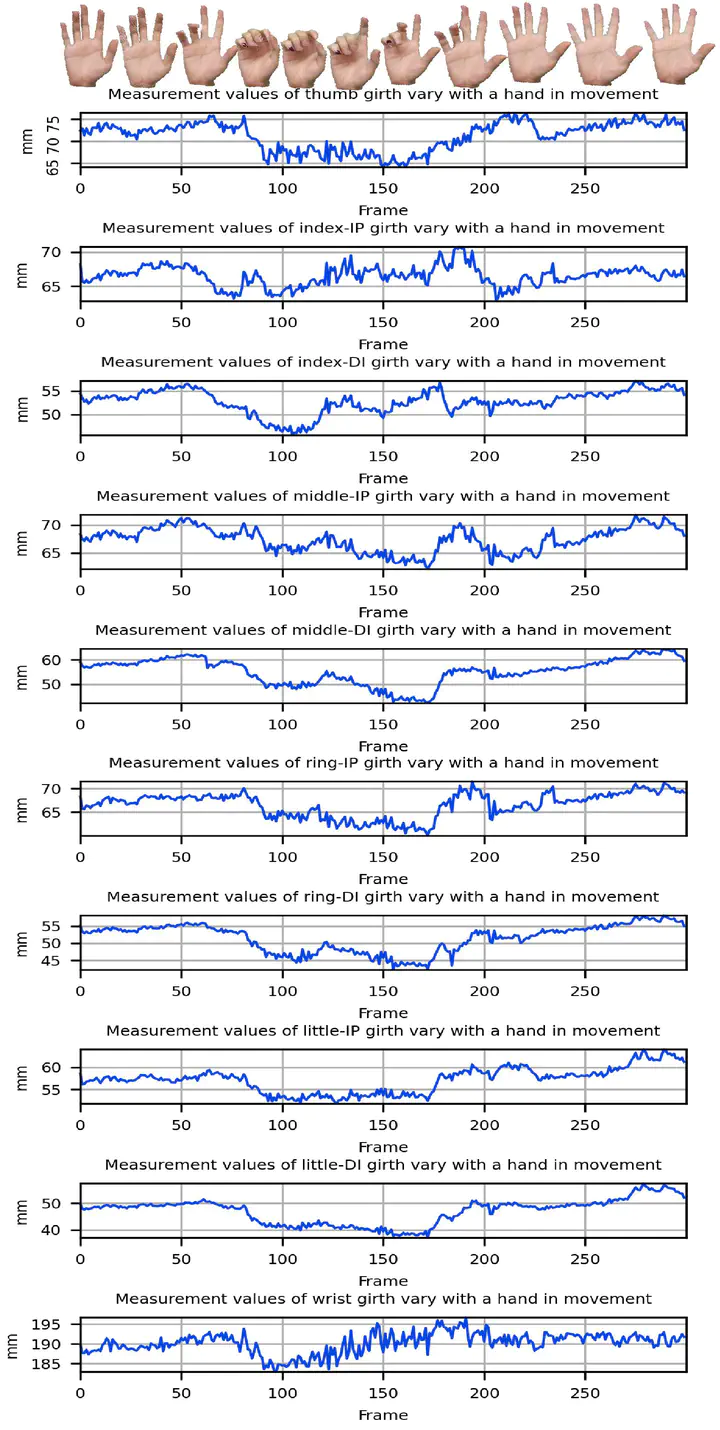

Hand measurement is vital for hand-centric applications such as the design of gloves, immobilization devices, and protective gear. Previously proposed vision-based methods can only extract hand dimensions in a static, standardized posture (an open palm). However, dynamic hand measurements should be considered when designing these wearable products, because the interaction between the hand and the product cannot be ignored. Unfortunately, none of the existing methods is designed to measure hands in motion. To address this problem, we propose Measure4DHand, a fast and user-friendly method that automatically extracts dynamic hand measurements from a sequence of depth images captured by a single depth camera. First, ten hand dimensions are defined. Second, a deep neural network is developed to predict landmark sequences for these ten dimensions from partial point cloud sequences. Finally, a method is designed to calculate dimension values from the landmark sequences. For training, we also propose a novel synthetic dataset of 234K hands in various shapes and poses, together with their corresponding ground-truth landmarks. An experiment on real-world data captured with a Kinect illustrates how the ten dimensions evolve during hand movement, and the reported mean ranges of variation provide valuable information for the design of hand-wearable products.
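The abstract does not state how dimension values are computed from the predicted landmarks. The sketch below is one plausible approach and should not be read as the Measure4DHand procedure. It assumes that each dimension is measured as the length of a polyline through its ordered 3D landmarks, closed for circumference-type dimensions and open for length-type ones. It also assumes that a dimension's range of variation over a sequence is its maximum value minus its minimum value across frames. The function names `polyline_length`, `dimension_sequence`, and `range_of_variation` are hypothetical.

```python
import numpy as np

def polyline_length(points, closed=False):
    """Sum of Euclidean distances between consecutive landmarks of shape (K, 3).

    Hypothetical: closed=True treats the dimension as a circumference by
    joining the last landmark back to the first.
    """
    pts = np.asarray(points, dtype=float)
    if closed:
        pts = np.vstack([pts, pts[:1]])  # close the loop
    return float(np.linalg.norm(np.diff(pts, axis=0), axis=1).sum())

def dimension_sequence(landmark_seq, closed=False):
    """Per-frame values of one dimension from landmarks of shape (T, K, 3)."""
    return np.array([polyline_length(frame, closed) for frame in landmark_seq])

def range_of_variation(values):
    """Assumed definition: max minus min of a dimension over all frames."""
    values = np.asarray(values, dtype=float)
    return float(values.max() - values.min())

# Toy example: a unit square of "landmarks" scaled over three frames.
square = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]], dtype=float)
seq = np.stack([square * s for s in (1.0, 1.5, 2.0)])
values = dimension_sequence(seq, closed=True)  # perimeters 4, 6, 8
print(values, range_of_variation(values))
```

Averaging `range_of_variation` over several captured sequences would give one possible reading of a "mean range of variation" for each dimension.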