Abstract

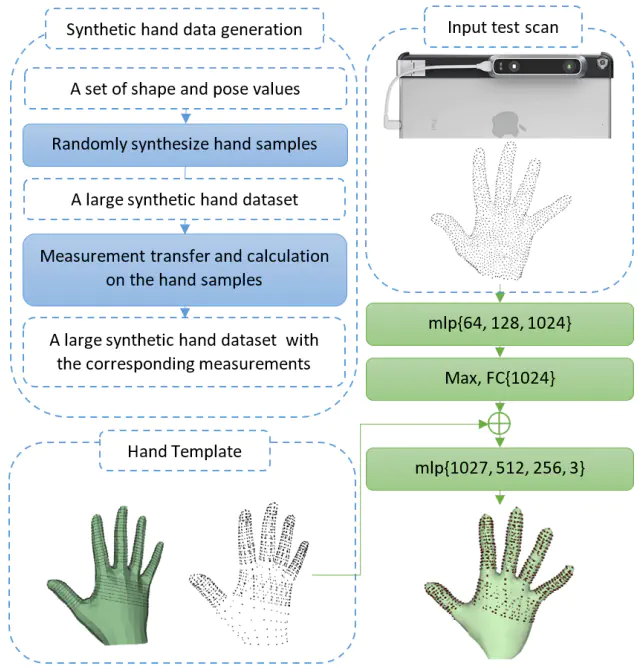

Recent advances in 3D scanning technology make it possible to acquire hand geometry as a three-dimensional point cloud. Accurate 3D hand scanning and accurate extraction of hand biometrics are crucial for applications in the medical sciences, the fashion industry, and augmented and virtual reality (AR/VR). Traditional hand measurement requires manual work with a measuring tape, which is time-consuming and highly dependent on the operator’s expertise. In this paper, we propose H-Net, to the best of our knowledge the first deep neural network for automatic hand measurement extraction from a single 3D scan. The network follows an encoder-decoder design: it takes a point cloud of the hand as input and outputs the reconstructed hand mesh together with the corresponding measurement values. To train the model, we introduce a novel synthetic dataset of hands in various shapes and poses, with their corresponding measurements. Experimental results on both synthetic data and real scans captured with an Occipital Mark I structure sensor demonstrate that the proposed method outperforms state-of-the-art methods in both accuracy and speed.